Introduction

Docker containers have revolutionized application deployment, but oversized images can significantly impact performance, costs, and deployment times. A well-optimized Docker image can mean the difference between a 1GB bloated container and a 50MB streamlined deployment. This comprehensive guide explores proven strategies to reduce Docker image size while maximizing performance, based on industry best practices and real-world implementations.

Why Docker Image Optimization Matters

Optimizing Docker images delivers tangible benefits across your entire development and deployment pipeline:

- Faster Build Times: Smaller base images and efficient layers reduce overall build duration

- Reduced Storage Costs: Less disk space consumption across development, staging, and production environments

- Quicker Container Startup: Smaller images download and extract faster, enabling rapid scaling

- Enhanced Security: Minimal attack surface by removing unnecessary components and dependencies

- Improved CI/CD Performance: Faster image transfers between registry, build systems, and deployment targets

- Better Resource Utilization: Lower network bandwidth consumption and less disk pressure on build and runtime hosts

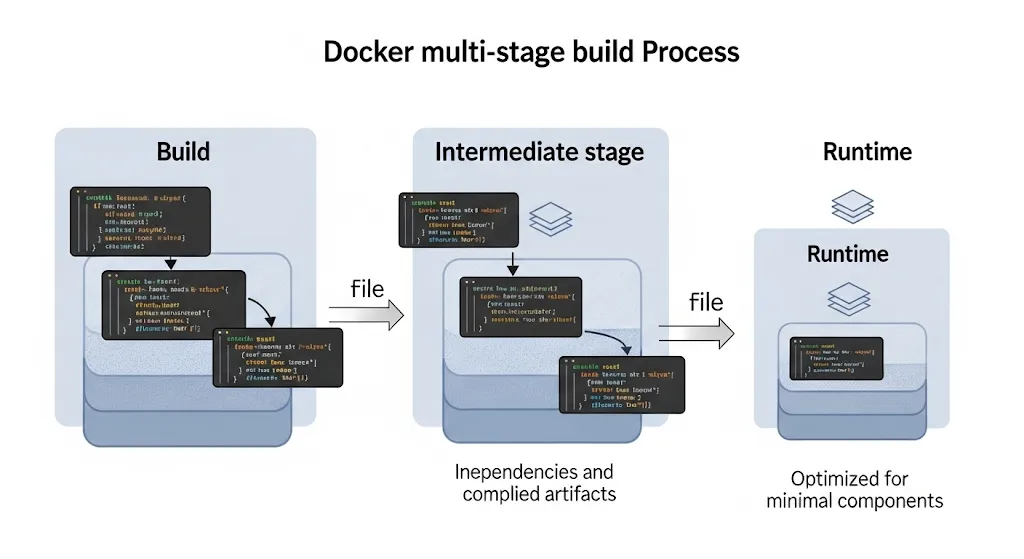

Multi-Stage Builds: The Foundation of Optimization

Multi-stage builds represent one of the most powerful optimization techniques, allowing you to separate build-time dependencies from runtime requirements.
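
Each stage in a multi-stage Dockerfile begins with its own FROM line, and a stage named with AS can be built by itself with `docker build --target <name>`. As a rough aid for seeing which stages a Dockerfile defines, the sketch below prints the stage names. `list_stages` is a hypothetical helper, not part of Docker, and it assumes each FROM instruction sits on a single line.

```shell
#!/bin/sh
# list_stages: print the name of every named build stage in a Dockerfile.
# Hypothetical helper; assumes each FROM instruction fits on one line.
list_stages() {
    dockerfile="${1:-Dockerfile}"
    # A named stage looks like "FROM [--platform=...] image AS name".
    # Dockerfile keywords are case-insensitive, so compare in upper case.
    awk 'toupper($1) == "FROM" {
        for (i = 2; i < NF; i++)
            if (toupper($i) == "AS") print $(i + 1)
    }' "$dockerfile"
}

# Example, run next to a multi-stage Dockerfile (needs a Docker daemon):
#   list_stages Dockerfile
#   docker build --target builder -t your-app:build .
```

Building only the `builder` stage this way is a quick check that the heavy toolchain stays out of the final stage.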

Basic Multi-Stage Structure

# Build stage: install production dependencies only
FROM node:18-alpine AS builder
WORKDIR /app
COPY package*.json ./
# --omit=dev replaces the deprecated --only=production flag
RUN npm ci --omit=dev && npm cache clean --force

# Runtime stage: copy the installed modules, then the application source
FROM node:18-alpine AS runtime
WORKDIR /app
COPY --from=builder /app/node_modules ./node_modules
COPY . .
EXPOSE 3000
CMD ["npm", "start"]

Advanced Multi-Stage Optimization

For applications requiring compilation, consider this optimized approach:

# Dependencies stage
FROM golang:1.21-alpine AS deps
RUN apk add --no-cache git ca-certificates
WORKDIR /build
COPY go.mod go.sum ./
RUN go mod download

# Build stage
FROM deps AS builder
COPY . .
RUN CGO_ENABLED=0 GOOS=linux go build -ldflags="-w -s" -o app

# Final stage
FROM alpine:latest
RUN apk --no-cache add ca-certificates
COPY --from=builder /build/app /usr/local/bin/app
ENTRYPOINT ["/usr/local/bin/app"]

Choosing Optimal Base Images

Base image selection significantly impacts final image size and security posture. The sizes below are approximate and vary with the tag, the architecture, and whether the image is measured compressed or unpacked:

Size Comparison by Base Image

- Alpine Linux: ~3MB (minimal, musl-based)

- Distroless: ~20MB (Google’s minimal images)

- Debian Slim: ~30MB (Debian with minimal packages)

- Ubuntu: ~50MB (full-featured, larger footprint)

- Standard distributions: 100MB+ (include development tools)
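
Because these figures shift between releases, it can help to measure the candidates on your own machine. The sketch below is one way to do that, assuming the images are already pulled; `bytes_to_mb` and `compare_base_images` are hypothetical helper names. `docker image inspect` reports the unpacked size, so the numbers will typically be larger than the compressed sizes shown on registry pages.

```shell
#!/bin/sh
# bytes_to_mb: convert a byte count to whole decimal megabytes (rounded down).
bytes_to_mb() {
    echo $(( $1 / 1000000 ))
}

# compare_base_images: print the unpacked local size of each image named in
# the arguments. Hypothetical helper; images must already be pulled.
compare_base_images() {
    for image in "$@"; do
        # .Size is the image size in bytes as reported by the Docker daemon
        bytes=$(docker image inspect --format '{{.Size}}' "$image") || continue
        printf '%-24s %s MB\n' "$image" "$(bytes_to_mb "$bytes")"
    done
}

# Example (needs a Docker daemon):
#   docker pull alpine:latest && docker pull ubuntu:latest
#   compare_base_images alpine:latest ubuntu:latest
```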

Alpine Linux Optimization Example

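# Before: Standard Node.js image (~300MB)
FROM node:18

# After: Alpine-based optimization (~50MB)
FROM node:18-alpine
WORKDIR /app
COPY package*.json ./
# Build tools only help if something is compiled before they are removed;
# the npm ci step is an assumed placeholder for that work
RUN apk add --no-cache \
        python3 \
        make \
        g++ \
    && npm ci --omit=dev \
    && apk del make g++

Layer Optimization Strategies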

Docker’s layered filesystem requires careful command structuring to minimize image size and maximize cache efficiency.
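
Each Dockerfile instruction that changes the filesystem adds a layer, and the image size is the sum of its layers, so a file deleted by a later instruction still occupies space in the layer that created it. One way to see this is to add up the per-layer sizes that `docker history` reports. A minimal sketch, where `sum_layer_bytes` and `count_nonempty_layers` are hypothetical helpers that read one byte count per line:

```shell
#!/bin/sh
# sum_layer_bytes: add up per-layer sizes read from standard input,
# one integer byte count per line. Hypothetical helper, not part of Docker.
sum_layer_bytes() {
    awk '{ total += $1 } END { printf "%.0f\n", total }'
}

# count_nonempty_layers: count the layers that actually add data.
count_nonempty_layers() {
    awk '$1 > 0 { n++ } END { print n + 0 }'
}

# Example (needs a Docker daemon); --human=false prints sizes in bytes:
#   docker history --human=false --format '{{.Size}}' your-image:tag | sum_layer_bytes
```

Comparing the total before and after merging RUN instructions shows whether a cleanup step actually removed data or only hid it in a later layer.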

Consolidating RUN Commands

Inefficient approach (creates multiple layers):

RUN apt-get update
RUN apt-get install -y curl
RUN apt-get install -y git
RUN apt-get clean
RUN rm -rf /var/lib/apt/lists/*

Optimized approach (single layer):

RUN apt-get update && \
    apt-get install -y --no-install-recommends \
        curl \
        git && \
    apt-get clean && \
    rm -rf /var/lib/apt/lists/* /tmp/* /var/tmp/*

Efficient Package Management

Different package managers require specific optimization flags:

# Debian/Ubuntu
RUN apt-get update && apt-get install -y --no-install-recommends \
        package-name && \
    apt-get clean && \
    rm -rf /var/lib/apt/lists/*

# Alpine
RUN apk add --no-cache package-name

# Python packages
RUN pip install --no-cache-dir package-name

# Node.js packages
RUN npm ci && npm cache clean --force

Advanced Image Slimming Techniques

Using .dockerignore Files

Prevent unnecessary files from entering the build context:

# .dockerignore
.git
.gitignore
README.md
Dockerfile
.dockerignore
node_modules
npm-debug.log
.nyc_output
.coverage
.cache
.env.local
.vscode
*.md
tests/
docs/

Avoiding Ownership Changes

Inefficient (duplicates file layers):

COPY app.jar /app.jar
RUN chown -R appuser:appuser /app.jar

Optimized (single layer):

COPY --chown=appuser:appuser app.jar /app.jar

Removing Development Dependencies

# Install, use, and remove in single layer
RUN apt-get update && \
    apt-get install -y --no-install-recommends \
        build-essential \
        python3-dev && \
    pip install --no-cache-dir -r requirements.txt && \
    apt-get purge -y build-essential python3-dev && \
    apt-get autoremove -y && \
    rm -rf /var/lib/apt/lists/*

Security Best Practices for Optimized Images

Non-Root User Implementation

# A Node.js base image is needed because the CMD below runs node
FROM node:18-alpine

# Create non-root user and add it to the matching group
RUN addgroup -g 1001 -S nodejs && \
    adduser -S -u 1001 -G nodejs nodejs

# Set working directory and ownership
WORKDIR /app
COPY --chown=nodejs:nodejs . .

# Switch to non-root user
USER nodejs
EXPOSE 3000
CMD ["node", "server.js"]

Security Scanning Integration

# Shell: scan for vulnerabilities
docker run --rm -v /var/run/docker.sock:/var/run/docker.sock \
    -v "$(pwd)":/tmp/.cache/ \
    aquasec/trivy:latest image your-image:tag

# Dockerfile: use distroless for minimal attack surface
FROM gcr.io/distroless/nodejs18-debian11:latest
COPY --from=builder /app /app
WORKDIR /app
EXPOSE 3000
# The distroless Node.js image already uses node as its entrypoint,
# so only the script path is passed
CMD ["server.js"]

Build Cache Optimization

Leveraging BuildKit Features

# syntax=docker/dockerfile:1
# Debian-based image, since the apt-get step below is not available on Alpine
FROM python:3.11-slim
COPY requirements.txt .

# Cache mount for pip
RUN --mount=type=cache,target=/root/.cache/pip \
    pip install -r requirements.txt

# Cache mount for apt
RUN --mount=type=cache,target=/var/cache/apt \
    --mount=type=cache,target=/var/lib/apt \
    apt-get update && \
    apt-get install -y package-name

Optimizing Layer Order

# Dependencies first (changes less frequently)
COPY package*.json ./
RUN npm ci --omit=dev

# Application code last (changes frequently)
COPY . .
RUN npm run build

Performance Enhancement Strategies

Using Docker Slim Toolkit

The Docker Slim toolkit (now maintained as SlimToolkit) can automatically reduce image size, by up to 90% in favorable cases:

# Install docker-slim
curl -sL https://raw.githubusercontent.com/docker-slim/docker-slim/master/scripts/install-dockerslim.sh | sudo -E bash -

# Optimize existing image
docker-slim build --target your-app:latest --tag your-app:slim

Image Squashing

# Build with squash flag (experimental)
docker build --squash -t optimized-image .

# Or use docker-squash tool
docker-squash optimized-image:latest -t optimized-image:squashed

Parallel Build Optimization

# syntax=docker/dockerfile:1
# Python base image, since the stages below use pip and Python 3.11 paths
FROM python:3.11-alpine AS base
RUN apk add --no-cache ca-certificates

# Parallel build stages
FROM base AS deps
COPY requirements.txt .
RUN pip install -r requirements.txt

FROM base AS app
COPY app/ ./app/

# Final merge
FROM base AS final
COPY --from=deps /usr/local/lib/python3.11/site-packages/ /usr/local/lib/python3.11/site-packages/
COPY --from=app /app ./app
CMD ["python", "app/main.py"]

Real-World Optimization Example

Here’s a complete before-and-after optimization that cuts the image from 1.29GB to 256MB, a reduction of roughly 80%:

Before Optimization (1.29GB)

FROM node:20
WORKDIR /app
COPY . .
RUN npm install
RUN npm run build
EXPOSE 3000
CMD ["npm", "start"]

After Optimization (256MB)

# syntax=docker/dockerfile:1
# Production dependencies only
FROM node:20-alpine AS deps
WORKDIR /app
COPY package*.json ./
RUN npm ci --omit=dev --no-audit --no-fund && \
    npm cache clean --force

# Full dependency set for the build step
FROM node:20-alpine AS builder
WORKDIR /app
COPY package*.json ./
RUN npm ci --include=dev --no-audit --no-fund
COPY . .
# No prune needed here: the runtime stage takes node_modules from deps
RUN npm run build

# Minimal runtime image running as a non-root user
FROM node:20-alpine AS runtime
RUN addgroup -g 1001 -S nodejs && \
    adduser -S -u 1001 -G nodejs nodejs
WORKDIR /app
COPY --from=builder --chown=nodejs:nodejs /app/dist ./dist
COPY --from=deps --chown=nodejs:nodejs /app/node_modules ./node_modules
COPY --chown=nodejs:nodejs package.json ./
USER nodejs
EXPOSE 3000
CMD ["node", "dist/server.js"]

Advanced Optimization Tools

Dive: Layer Analysis Tool

# Analyze image layers
docker run --rm -it \
    -v /var/run/docker.sock:/var/run/docker.sock \
    wagoodman/dive:latest your-image:tag

Container Structure Tests

# structure-test.yaml
schemaVersion: '2.0.0'
fileExistenceTests:
  - name: 'application binary'
    path: '/usr/local/bin/app'
    shouldExist: true
fileContentTests:
  - name: 'non-root user'
    path: '/etc/passwd'
    # Regex; matches the UID without assuming the GID, home directory, or shell
    expectedContents: ['nodejs:x:1001:.*']

Troubleshooting Common Issues

Alpine Compatibility Problems

# Fix musl vs glibc issues
FROM alpine:latest
RUN apk add --no-cache gcompat
COPY --from=builder /app/binary /usr/local/bin/app

Missing SSL Certificates

FROM alpine:latest
RUN apk add --no-cache ca-certificates
COPY --from=builder /app/binary /usr/local/bin/app

Debugging Slim Images

# Multi-stage debugging approach
FROM alpine:latest AS debug
RUN apk add --no-cache curl bash htop
COPY --from=builder /app/binary /usr/local/bin/app

FROM alpine:latest AS production
COPY --from=builder /app/binary /usr/local/bin/app

Monitoring and Metrics
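
One simple metric to track from build to build is the percentage by which an image shrank. A minimal sketch of that arithmetic, assuming both sizes are positive whole numbers in the same unit and that the image did not grow; `reduction_pct` is a hypothetical helper name:

```shell
#!/bin/sh
# reduction_pct: percentage size reduction from BEFORE to AFTER, rounded to
# the nearest whole percent. Both arguments must be positive integers in the
# same unit (for example MB), with AFTER no larger than BEFORE.
reduction_pct() {
    before=$1
    after=$2
    # Integer arithmetic: scale by 100 and add half the divisor to round.
    echo $(( ((before - after) * 100 + before / 2) / before ))
}

# Example with the approximate node:18 figures quoted earlier (~300MB -> ~50MB):
#   reduction_pct 300 50
```

With those example figures the function prints 83.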

Image Size Tracking

# Track image sizes over time
docker images --format "table {{.Repository}}\t{{.Tag}}\t{{.Size}}" |
    sort -k3 -h

# Compare image efficiency
docker history --no-trunc your-image:tag

Performance Monitoring

# Container resource usage
docker stats your-container

# Startup time measurement
time docker run --rm your-image:tag echo "Container started"

Conclusion

Docker image optimization is a critical skill that directly impacts deployment speed, resource costs, and security posture. By implementing multi-stage builds, choosing appropriate base images, optimizing layers, and following security best practices, you can achieve dramatic size reductions while improving performance.

Key takeaways for effective optimization:

- Start with minimal base images like Alpine or distroless

- Use multi-stage builds to separate build and runtime dependencies

- Consolidate RUN commands and clean up artifacts in the same layer

- Leverage BuildKit features for advanced caching strategies

- Implement security best practices with non-root users

- Use specialized tools like Docker Slim for automated optimization

- Monitor and measure optimization results continuously

Remember that optimization is an iterative process. Start with the most impactful changes like base image selection and multi-stage builds, then progressively implement more advanced techniques based on your specific requirements and constraints.